Why Self-Hosted LLMs May Define the 2026 AI Landscape

TL;DR

- Data Sovereignty: Local hosting keeps sensitive information within private infrastructure, helping organizations meet the increasingly stringent data-protection requirements of 2026.

- Economic Sustainability: Moving from pay-per-token APIs to owned hardware reduces the marginal cost of token generation to little more than electricity for high-volume users.

- Customization: Users can fine-tune models to specific corporate identities or personal workflows without the limitations of external provider guardrails.

- Operational Resilience: Self-hosted models provide low-latency, offline functionality that eliminates dependency on third-party cloud stability and internet connectivity.

The Profound Shift Toward Localized Intelligence in 2026

The digital landscape of 2026 is witnessing a major transition that some call the Cloud Exodus. While centralized AI services initially dominated the market, a strategic pivot toward the self-hosted LLM is now a leading priority for tech-forward enterprises and privacy-conscious individuals in Malaysia and beyond.

As search engines evolve into answer engines, high-fidelity, private, and customizable intelligence becomes a necessity rather than a luxury.

This transition represents the next significant phase of the AI revolution, moving away from generic cloud interfaces toward specialized, local sovereignty. Here are five reasons why self-hosting is poised to take off in 2026:

1. Data Sovereignty and Radical Privacy Protocols

One of the most compelling arguments for a self-hosted LLM centers on the absolute control of information. In an era where data breaches and unauthorized training on user inputs are prevalent, local hosting offers a secure vault.

When an organization deploys a model like Llama 4 or its successors on its own hardware, the data never traverses the public internet. This architecture is essential for sectors such as healthcare and law, where adherence to evolving data protection regulations is non-negotiable.

By keeping the model within a private local area network, the risk of proprietary code leakage or personal data exposure is sharply reduced. Furthermore, this internal processing provides a strong safeguard against the invasive data harvesting practices common across the broader AI industry.
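One practical way to enforce the "data never leaves the network" rule is to refuse to send prompts to any endpoint that does not resolve to a loopback or private address. The Python sketch below is a hypothetical guard (the names `is_private_endpoint` and `require_private_endpoint` are illustrative, not part of any tool); it uses only the standard library's `ipaddress` and `socket` modules and assumes your model server is reached through a localhost or LAN URL. It checks addressing only and is no substitute for firewall rules or network segmentation.

```python
import ipaddress
import socket
from urllib.parse import urlparse


def is_private_endpoint(url: str) -> bool:
    """True only if every address the URL's host resolves to is loopback or private."""
    host = urlparse(url).hostname
    if not host:
        return False
    try:
        # getaddrinfo handles both hostnames (e.g. "localhost") and literal IPs.
        infos = socket.getaddrinfo(host, None)
    except socket.gaierror:
        return False
    addresses = {info[4][0] for info in infos}
    if not addresses:
        return False
    for addr in addresses:
        ip = ipaddress.ip_address(addr.split("%")[0])  # drop any IPv6 zone id
        if not (ip.is_loopback or ip.is_private):
            return False
    return True


def require_private_endpoint(url: str) -> str:
    """Raise before any prompt is sent to an endpoint outside the private network."""
    if not is_private_endpoint(url):
        raise ValueError(f"refusing to send data to non-private endpoint: {url}")
    return url


if __name__ == "__main__":
    # Illustrative local endpoint; passes on a typical setup.
    require_private_endpoint("http://127.0.0.1:11434")
```

Calling `require_private_endpoint` in front of every client request makes an accidental switch to a public URL fail loudly instead of silently sending data off-site.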

2. The Macroeconomics of Localized AI Infrastructure

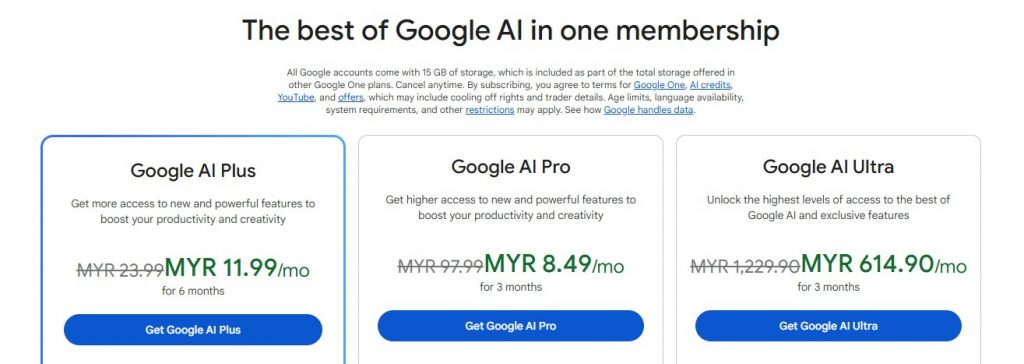

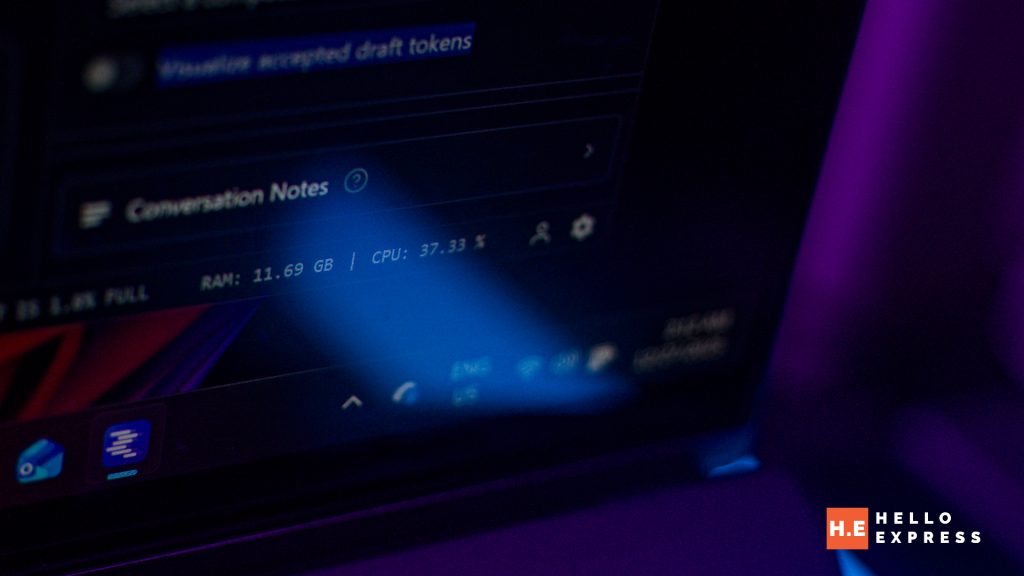

The financial model of artificial intelligence is currently undergoing a radical restructuring. Cloud-based AI operates on a rental paradigm where users pay per token, a variable cost that scales aggressively with high-volume usage. Conversely, a self-hosted LLM operates on a capital expenditure model. Once the initial investment in hardware—such as high-end GPUs or unified memory silicon—is complete, the marginal cost of operation drops to the price of electricity.

For agencies and developers generating millions of words monthly, this shift facilitates predictable budgeting and significant long-term savings. Consequently, enterprises increasingly view local AI hardware not as an expense, but as a critical fixed asset that protects them from the volatile pricing and tier-based restrictions of external API providers.
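The break-even point between renting and owning can be sketched with simple arithmetic: the hardware pays for itself after hardware_cost / (monthly API spend - monthly electricity cost) months. The Python below is a minimal model under stated assumptions; every input, including the example figures, is hypothetical and should be replaced with your own token volume, API price, hardware quote, power draw, and tariff. It deliberately ignores maintenance, staff time, and depreciation, which a real budget should include.

```python
from dataclasses import dataclass


@dataclass
class CostInputs:
    tokens_per_month: float       # tokens generated per month
    api_price_per_million: float  # blended API price per 1M tokens
    hardware_cost: float          # one-off hardware purchase
    power_draw_kw: float          # average power draw under load, kW
    hours_per_month: float        # hours of active inference per month
    electricity_per_kwh: float    # local electricity tariff per kWh


def monthly_api_cost(c: CostInputs) -> float:
    """Variable cost of renting: pay per token."""
    return c.tokens_per_month / 1_000_000 * c.api_price_per_million


def monthly_local_cost(c: CostInputs) -> float:
    """Marginal cost of owning: electricity only (no maintenance or depreciation)."""
    return c.power_draw_kw * c.hours_per_month * c.electricity_per_kwh


def break_even_months(c: CostInputs) -> float:
    """Months until cumulative API savings cover the hardware purchase."""
    monthly_savings = monthly_api_cost(c) - monthly_local_cost(c)
    if monthly_savings <= 0:
        return float("inf")  # on these inputs the hardware never pays for itself
    return c.hardware_cost / monthly_savings


# Hypothetical example figures, all in one currency; replace with your own.
example = CostInputs(
    tokens_per_month=200_000_000,
    api_price_per_million=5.0,
    hardware_cost=8_000.0,
    power_draw_kw=0.5,
    hours_per_month=300.0,
    electricity_per_kwh=0.25,
)
print(round(break_even_months(example), 1))  # → 8.3
```

With these illustrative inputs, the API bill is 1,000 per month against 37.5 in electricity, so the hardware breaks even in roughly 8.3 months. If the API bill is smaller than the electricity cost, the function returns infinity: on cost alone, local hardware would never pay for itself at that volume.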

3. Customization and Fine-Tuning Capability

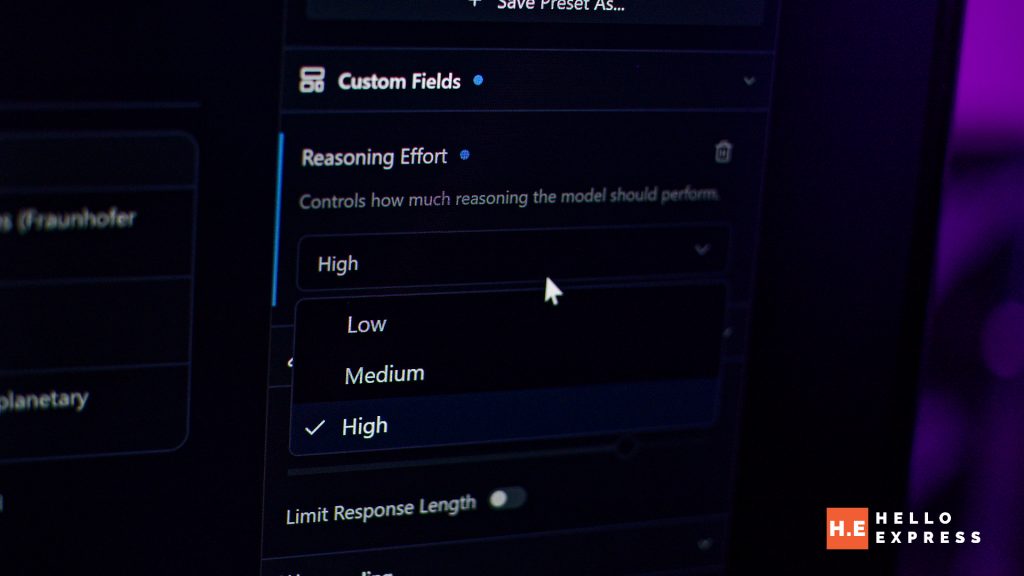

Generic models often suffer from corporate sanitization, leading to responses that lack depth or specific stylistic nuances. A self-hosted LLM allows for deep fine-tuning: further training a pretrained model on a specialized dataset so that it adopts a specific persona or area of expertise. This capability enables a model to understand internal company documentation, technical jargon, or a unique creative voice with high precision.

Furthermore, local hosting removes the arbitrary guardrails often imposed by cloud providers, allowing for a more authentic and unfiltered exchange of information tailored to the user’s specific requirements. This level of granular control helps the AI align closely with the unique intellectual property and cultural context of the organization.
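Fine-tuning starts with a dataset of example exchanges written in the voice you want the model to learn. The sketch below converts question-and-answer pairs into chat-style JSONL (one JSON object per line, each holding a `messages` list with `system`, `user`, and `assistant` roles), a layout that many fine-tuning tools accept; confirm the exact schema your trainer expects before relying on it. The helper names, the company name, and the sample content are all hypothetical.

```python
import json
from pathlib import Path
from typing import Iterable, Optional


def to_chat_record(question: str, answer: str, system: Optional[str] = None) -> dict:
    """Wrap one Q&A pair as a chat-style training example."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": question})
    messages.append({"role": "assistant", "content": answer})
    return {"messages": messages}


def to_jsonl(records: Iterable[dict]) -> str:
    """Serialise records as JSON Lines: one compact JSON object per line."""
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)


if __name__ == "__main__":
    # Hypothetical internal Q&A pairs; a real dataset would be much larger.
    persona = "You are the in-house assistant for Example Sdn Bhd."
    pairs = [
        ("What is our refund window?", "Refunds are accepted within 30 days of delivery."),
        ("Who approves travel claims?", "Your line manager approves claims through the HR portal."),
    ]
    records = [to_chat_record(q, a, system=persona) for q, a in pairs]
    Path("train.jsonl").write_text(to_jsonl(records), encoding="utf-8")
```

Keeping the same system prompt on every record is one simple way to tie the learned style to a persona; the resulting `train.jsonl` never leaves the machine that builds it.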

4. Operational Autonomy and the Elimination of Latency

Reliability remains a critical bottleneck for cloud-dependent services. Internet outages or server downtime from major providers can paralyze AI-integrated workflows. Localized models provide a robust solution by offering full offline functionality. Furthermore, by processing requests locally, users bypass the latency inherent in round-trip data transmission to distant server farms.

This near-instantaneous response time is crucial for the deployment of real-time coding assistants and voice-activated interfaces that require fluid, human-like interaction speeds. In contrast to cloud services that often lag during peak hours, a local model delivers consistent and predictable performance regardless of external network conditions or bandwidth limitations.

5. The Foundation for Agentic Ecosystems

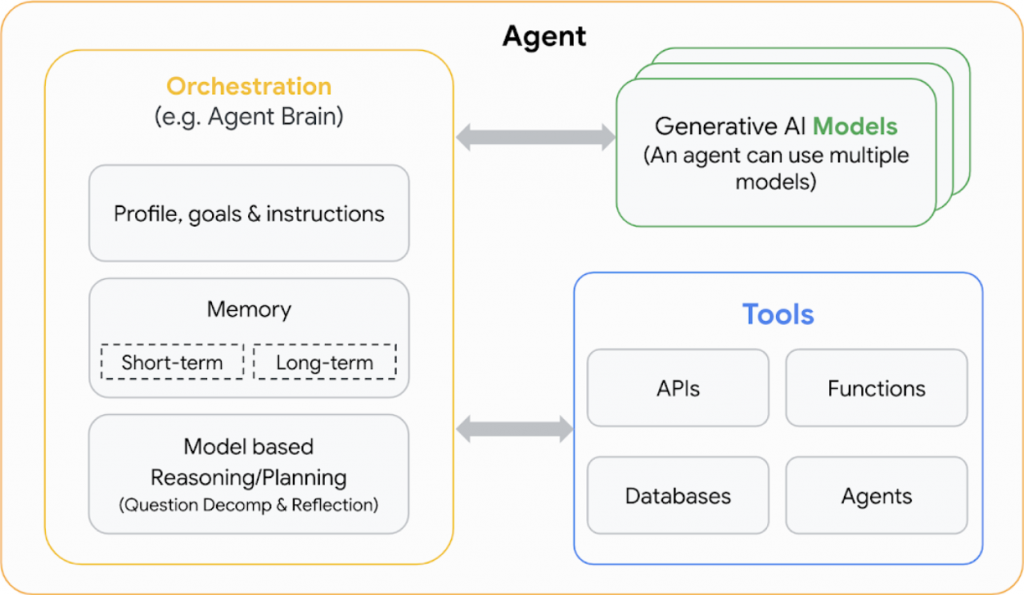

The ultimate realization of AI in 2026 is the move toward autonomous agents. These are systems capable of executing complex tasks such as file management, scheduling, and cross-platform communication without constant human intervention.

Granting such extensive permissions to a cloud-based entity presents a significant security risk. A self-hosted LLM acts as a private orchestrator, providing a secure foundation where an agent can operate with full access to a user’s digital life without external exposure.

This local hub becomes the brain of a personal or corporate digital ecosystem, managing tasks with a level of trust that centralized services cannot replicate. Ultimately, the rise of agentic AI necessitates a move toward local hosting to ensure these powerful tools remain under the direct supervision and ownership of the user.
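A common way to keep an agent under its owner's supervision is to let the model only propose tool calls and have local code decide whether to run them. The Python sketch below is a hypothetical orchestrator: the class names, the JSON shape `{"name": ..., "args": {...}}` the model is assumed to emit, and the example tool are all assumptions, not any framework's API. It executes only tools that have been explicitly allowlisted and records every decision in an audit log.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple


@dataclass
class ToolCall:
    name: str
    args: Dict[str, Any]

    @classmethod
    def from_model_output(cls, text: str) -> "ToolCall":
        # Assumes the model was prompted to reply with {"name": ..., "args": {...}}.
        data = json.loads(text)
        return cls(name=data["name"], args=data.get("args", {}))


@dataclass
class LocalOrchestrator:
    tools: Dict[str, Callable[..., Any]] = field(default_factory=dict)
    audit_log: List[Tuple[str, str]] = field(default_factory=list)

    def allow(self, name: str, fn: Callable[..., Any]) -> None:
        """Explicitly grant the agent access to one tool."""
        self.tools[name] = fn

    def dispatch(self, call: ToolCall) -> Any:
        """Run an allowlisted tool; refuse and log anything else."""
        if call.name not in self.tools:
            self.audit_log.append(("denied", call.name))
            raise PermissionError(f"tool {call.name!r} is not allowlisted")
        self.audit_log.append(("allowed", call.name))
        return self.tools[call.name](**call.args)


if __name__ == "__main__":
    agent = LocalOrchestrator()
    # Hypothetical tool: a real one might write to a local calendar file.
    agent.allow("schedule", lambda title, when: f"Scheduled {title!r} at {when}")
    proposal = '{"name": "schedule", "args": {"title": "Standup", "when": "09:00"}}'
    print(agent.dispatch(ToolCall.from_model_output(proposal)))
```

Because both the model and the dispatcher run locally, the audit log and the permission list stay entirely in the owner's hands.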

Conclusion: The Future of Autonomous Intelligence

As we progress through 2026, the era of total dependency on centralized AI giants is coming to a close. The move toward the self-hosted LLM is more than just a technical preference; it is a fundamental shift toward digital independence and security. By reclaiming control over their computational resources, individuals and businesses are fostering a more resilient and innovative AI ecosystem. The ability to run high-performance models on local hardware democratizes access to elite intelligence, ensuring that the current wave of AI development is defined by privacy, efficiency, and absolute sovereignty.

Recommended Self-Hosting Platforms

To begin your journey into localized AI, the following platforms offer the most robust and user-friendly environments for deploying a self-hosted LLM in 2026:

- Ollama: The premier tool for developers and terminal enthusiasts, offering simple one-line commands to run and manage models. https://ollama.com/

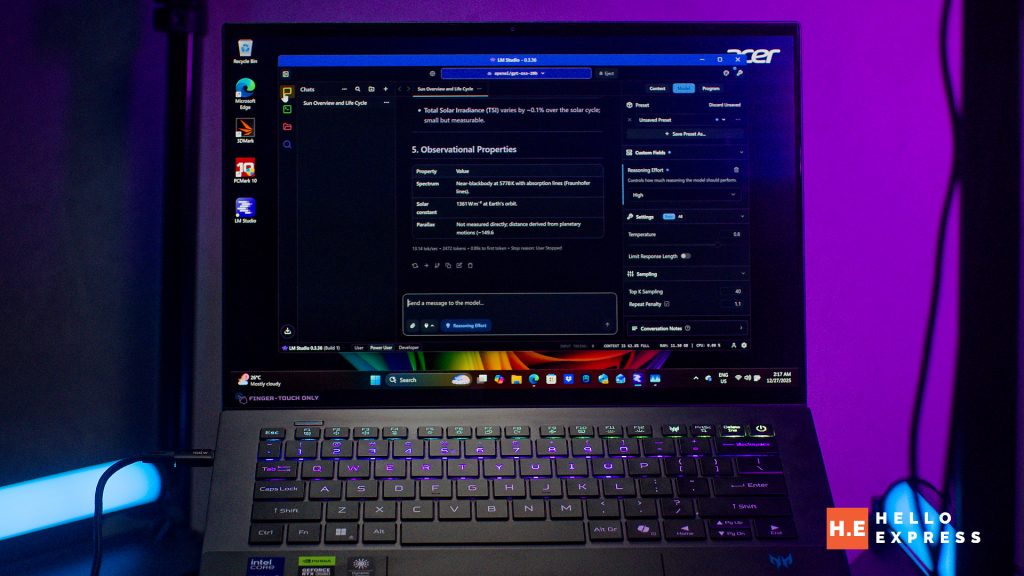

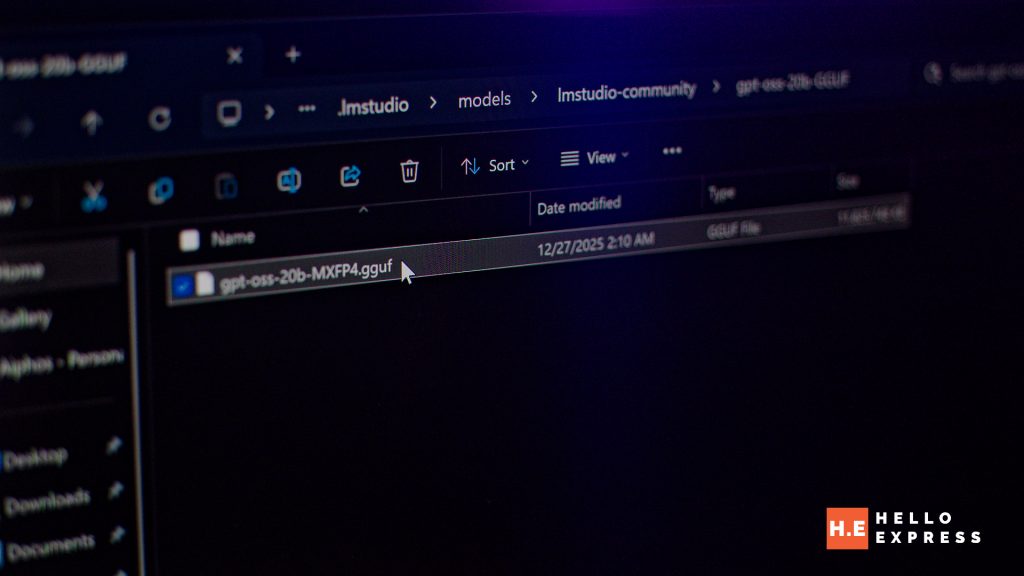

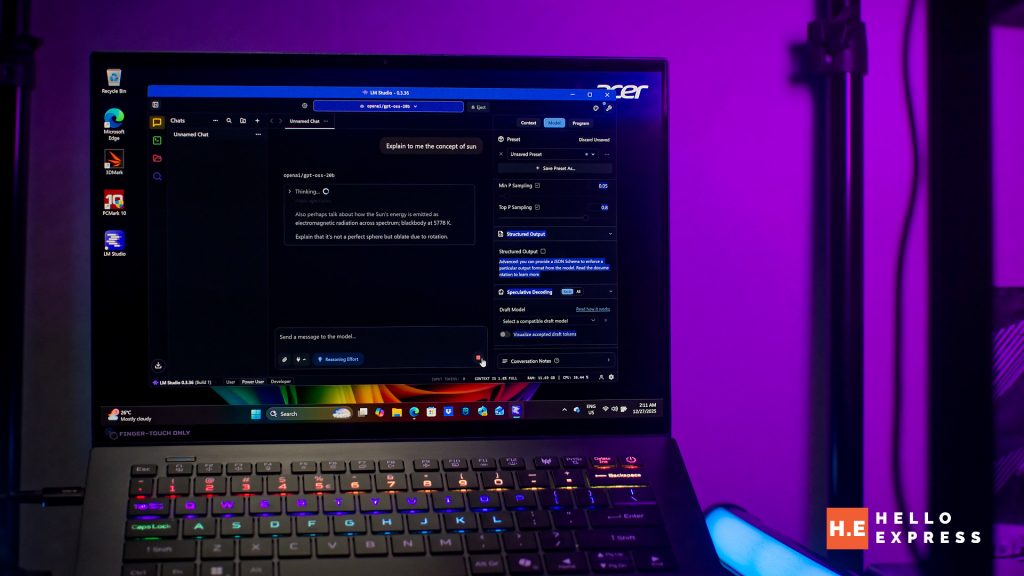

- LM Studio: An intuitive graphical interface designed for discovering, downloading, and chatting with local models without needing technical expertise. https://lmstudio.ai/

- Jan: A privacy-first, open-source alternative that provides a clean desktop experience for running models 100 percent offline. https://www.jan.ai/
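Besides the one-line `ollama run <model>` command, Ollama exposes a local HTTP API, by default on port 11434. The sketch below calls its `/api/generate` endpoint with `stream` set to false, so the server returns a single JSON object whose `response` field holds the generated text. It uses only Python's standard library; the model name `llama3.2` is just an example and assumes you have already run `ollama pull llama3.2` and that the Ollama server is running.

```python
import json
import urllib.request

OLLAMA_URL = "http://localhost:11434/api/generate"  # Ollama's default local address


def build_generate_request(model: str, prompt: str, url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str, url: str = OLLAMA_URL, timeout: float = 120.0) -> str:
    """Send the prompt to the local server and return the generated text."""
    req = build_generate_request(model, prompt, url)
    with urllib.request.urlopen(req, timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    return body["response"]


if __name__ == "__main__":
    print(generate("llama3.2", "Summarise the benefits of self-hosting in one sentence."))
```

Since the request goes to `localhost`, the prompt and the answer never leave the machine running Ollama.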