NVIDIA Introduces Spectrum-XGS Ethernet to Link Distributed Data Centers into Giga-Scale AI Super-Factories

TL;DR:

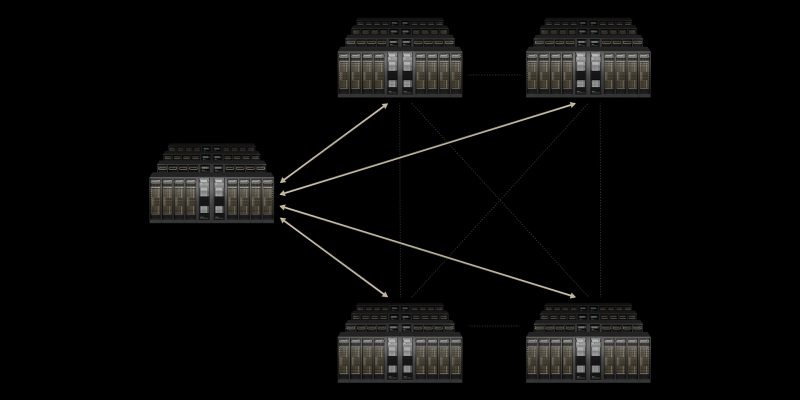

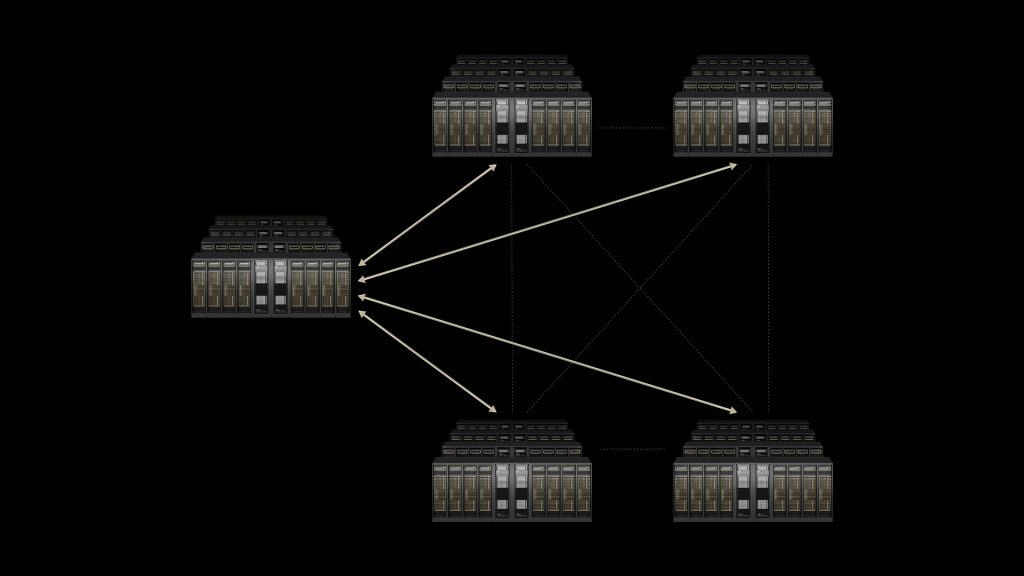

- NVIDIA has unveiled NVIDIA® Spectrum-XGS Ethernet, a new “scale-across” technology that combines multiple, geographically distributed data centers into a single, unified AI super-factory.

- This technology is a breakthrough addition to the NVIDIA Spectrum-X™ Ethernet platform, designed to overcome the power and capacity limits of a single data center.

- Spectrum-XGS Ethernet combines algorithms that adapt to the distance between facilities with auto-adjusted distance congestion control and precision latency management, nearly doubling the performance of the NVIDIA Collective Communications Library (NCCL) for multi-GPU and multi-node communication across sites.

- CoreWeave, a leading AI infrastructure provider, will be one of the first to deploy the technology, connecting its data centers to form a unified supercomputer.

- The technology is available now as part of the NVIDIA Spectrum-X Ethernet platform.

NVIDIA’s “Scale-Across” Technology Creates Unified, Giga-Scale AI Super-Factories

HOT CHIPS — In a major announcement today, NVIDIA introduced NVIDIA® Spectrum-XGS Ethernet, a groundbreaking technology that allows for the creation of “giga-scale AI super-factories” by seamlessly linking distributed data centers. This innovation addresses the growing challenge of power and capacity limits within a single data center, which can hinder the scaling of AI workloads.

“The AI industrial revolution is here, and giant-scale AI factories are the essential infrastructure,” said Jensen Huang, founder and CEO of NVIDIA. “With NVIDIA Spectrum-XGS Ethernet, we add scale-across to scale-up and scale-out capabilities to link data centers across cities, nations and continents into vast, giga-scale AI super-factories.”

Overcoming Traditional Network Limitations

Traditional off-the-shelf Ethernet networking is often insufficient for interconnecting geographically distributed data centers due to high latency, jitter, and unpredictable performance. Spectrum-XGS Ethernet solves this problem by integrating advanced algorithms that dynamically adapt the network to the distance between facilities.

The technology features auto-adjusted distance congestion control and precision latency management, which accelerate multi-GPU and multi-node communication. As a result, Spectrum-XGS Ethernet nearly doubles the performance of the NVIDIA Collective Communications Library (NCCL), enabling multiple data centers to operate as a single, fully optimized AI super-factory.
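To see why distance matters for congestion control, a rough back-of-the-envelope sketch may help. It is not NVIDIA's algorithm, and nothing in it comes from the announcement: the function names, the 400 Gb/s link speed, and the roughly 5 microseconds per kilometer of fiber delay are illustrative assumptions. It estimates the propagation-only round-trip time between two sites and the amount of data that must be in flight to keep a link full (the bandwidth-delay product).

```python
# Illustrative sketch only: names and numbers are assumptions, not NVIDIA's.
# Assumption: light in optical fiber covers ~200,000 km/s, i.e. ~5 us per km.
FIBER_DELAY_US_PER_KM = 5.0


def round_trip_time_us(distance_km: float) -> float:
    """Propagation-only round-trip time over a fiber path, in microseconds."""
    return 2 * distance_km * FIBER_DELAY_US_PER_KM


def bandwidth_delay_product_bytes(link_gbps: float, distance_km: float) -> float:
    """Bytes that must be in flight to keep the link busy for one round trip."""
    bits_per_us = link_gbps * 1_000  # 1 Gb/s equals 1,000 bits per microsecond
    return bits_per_us * round_trip_time_us(distance_km) / 8


if __name__ == "__main__":
    # Hypothetical 400 Gb/s link at in-building, metro, and long-haul distances.
    for km in (0.1, 10, 100, 1000):
        rtt = round_trip_time_us(km)
        bdp_mb = bandwidth_delay_product_bytes(400, km) / 1e6
        print(f"{km:>7} km  RTT {rtt:>9.1f} us  in-flight data {bdp_mb:>8.2f} MB")
```

Under these assumptions, a 100 km path has a round trip of about 1 millisecond, and a 400 Gb/s sender needs roughly 50 MB in flight to stay busy, compared with about 50 KB across a 100-meter hall. Feedback about congestion also arrives that much later, which is the kind of gap that distance-aware congestion control has to account for.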

CoreWeave to Be a First Adopter

Hyperscale pioneers are already embracing this new infrastructure. CoreWeave, a specialized AI cloud provider, will be among the first to deploy NVIDIA Spectrum-XGS Ethernet.

“With NVIDIA Spectrum-XGS, we can connect our data centers into a single, unified supercomputer, giving our customers access to giga-scale AI that will accelerate breakthroughs across every industry,” said Peter Salanki, cofounder and chief technology officer of CoreWeave.

Spectrum-XGS Ethernet is available now as part of the NVIDIA Spectrum-X Ethernet platform, which also includes NVIDIA Spectrum-X switches and NVIDIA ConnectX®-8 SuperNICs, all designed to deliver seamless scalability and ultralow latency for the most demanding AI workloads.